Curating Data Science: Flextable, M Bernstein, and Model Differently!

Week 48-2023 [Issue 16]

Welcome to The Repo! 🚀

Great work deserves to be shared. Especially in data science.

Each week, I'll curate three gems from the data science community:

- 🗄️ Re: Remarkable Repository
- 💻 P: Prolific Programmer
- 🏢 O: Outstanding Organization

I hope you find them as valuable and insightful as I do.

You can find all recommendations in the GitHub repository at finnoh/repo!

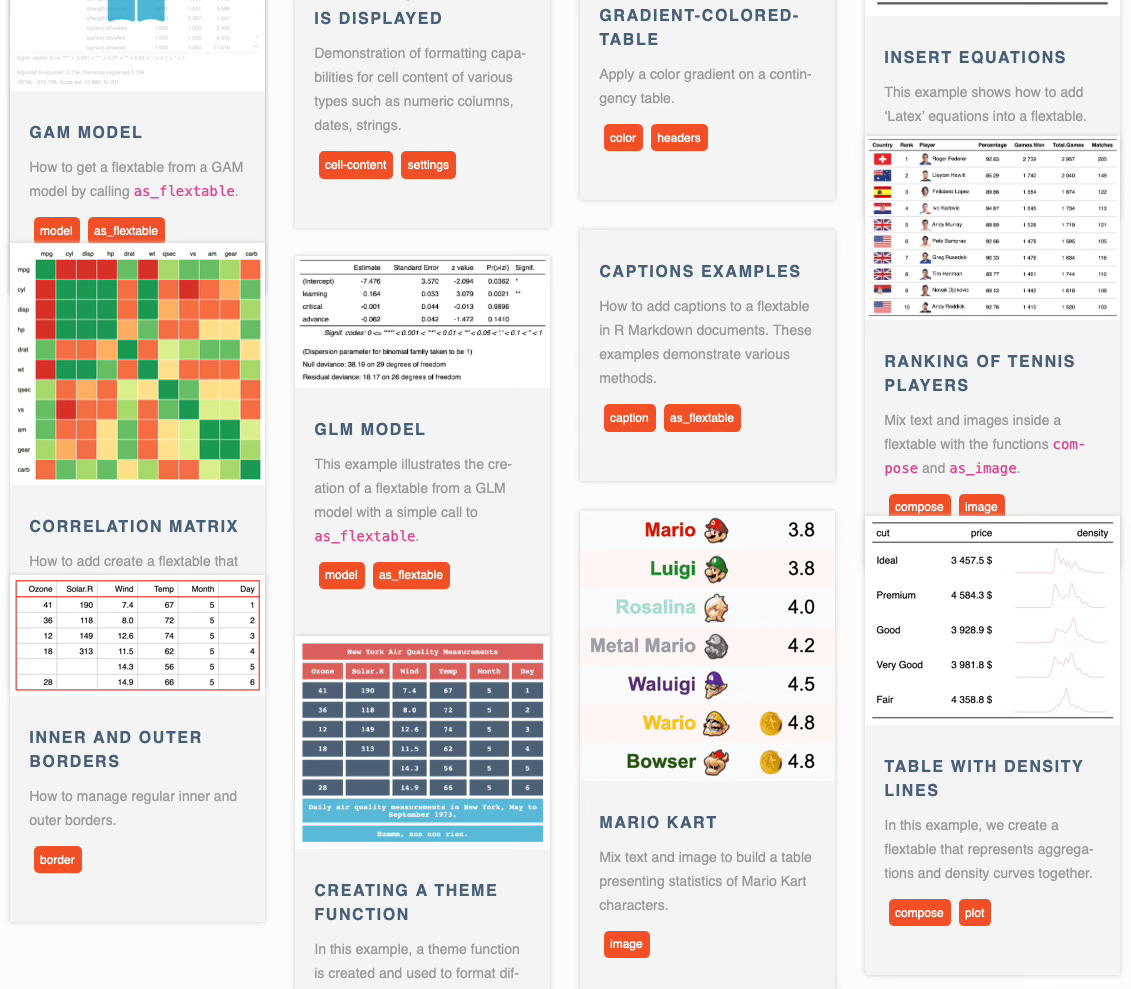

davidgohel/flextable

🗄️ Repository | The final word on tables?

According to my friend who told me about this package, flextable beats all other table packages by a long shot. I take his word for it.

Who wouldn’t, when you can combine LaTeX, images, formatting, and even in-row plots in a single table and export it to whatever format you need?

The next time you create tables for a report, especially in Quarto, look at flextable. You can find the documentation here.

Consider leaving davidgohel/flextable a star! ⭐️

Matthew N. Bernstein

💻 Programmer | A computational biologist; great at math and art

On Matthew Bernstein’s blog, you can find a wide range of topics in the fields of computational biology, deep learning, graphs, probabilistic models, algorithms for statistical inference, quantities related to probabilistic models, probability, information theory, and linear algebra. A lot of tough topics.

I found his blog through his elaborate article on Variational Auto-Encoders. This post and many of his other articles, such as this one on measure-theoretic probability theory (of course a pretty casual topic), are very rigorous and insightful.

(Hint: If you are into art, you should also give his blog a look. I found his paintings impressive too.)

Thank you for your work, Matthew Bernstein!

Model Differently

🏢 Organization | “All about data, from engineering to science.”

You will find a great variety of articles on Model Differently, from posts typical of data science blogs, such as showcases of clustering methods, to more niche ones like “Time Series Analysis and Forecasting with FB Prophet Python”.

I first stumbled across this blog through a post on LLMs, specifically an explanation of how GPT-2 comes up with its next-word predictions. I found it insightful and intuitive.